Boom! Alibaba Is Researching EMO

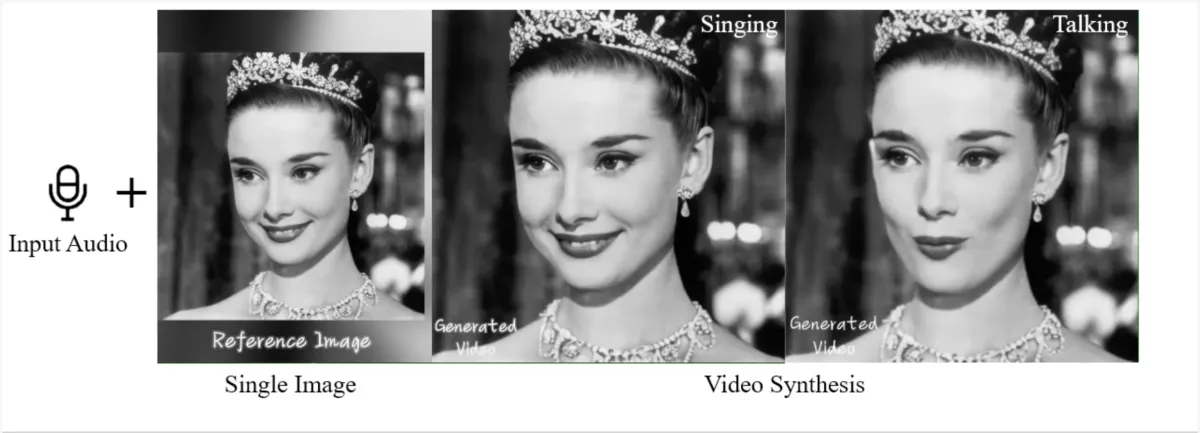

After the excitement over Sora, Alibaba Group's Institute for Intelligent Computing has now published the results of its own research: EMO: Emote Portrait Alive, Generating Expressive Portrait Videos with Audio2Video Diffusion Model under Weak Conditions.

EMO can turn a photo into a video that speaks or sings along with the audio source provided. That might sound ordinary, because many applications can already make a photo talk. What is crazier about EMO is that the photo doesn't just move its lips: it also shows facial expressions, eye movements, and eyebrow movements, so it looks more real.

AI Mona Lisa Singing Flowers

According to the official EMO page, EMO can currently generate singing videos from photos in various languages and portrait styles.

Rapid rhythm: apart from producing photos that sing slow songs, EMO can also produce photos that sing to a fast rhythm, for example rap.

KUN KUN rap singing

You can visit the EMO page through this link.

So, it looks like we will be flooded with a tsunami of generated content in the coming years. What do you think?

